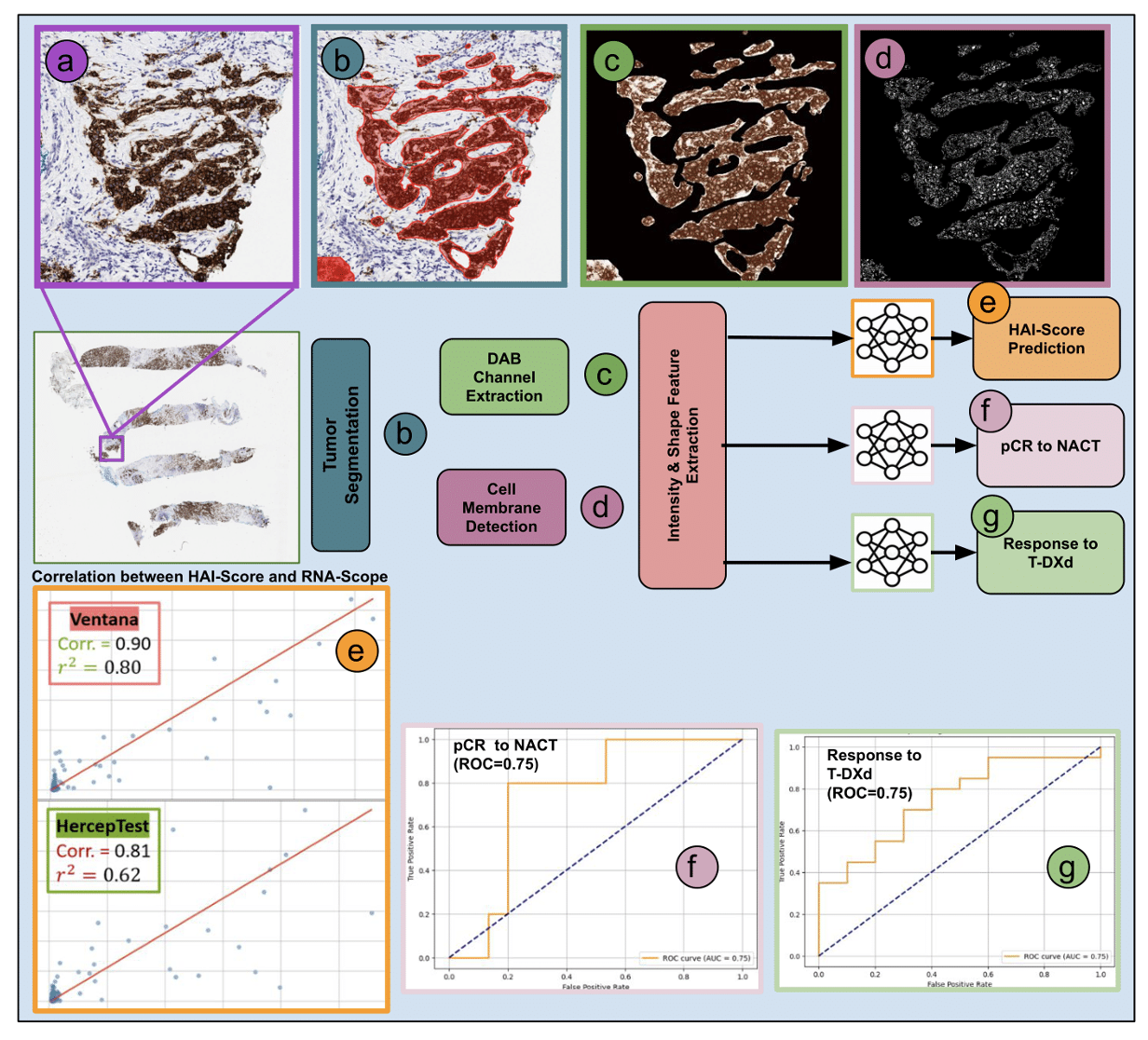

HAI-score: An Objective AI Method for Accurate HER2 H-score Estimation in Breast Cancer

Published in ASCO Conference, 2025

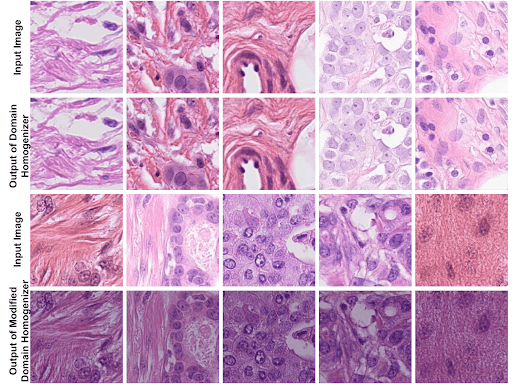

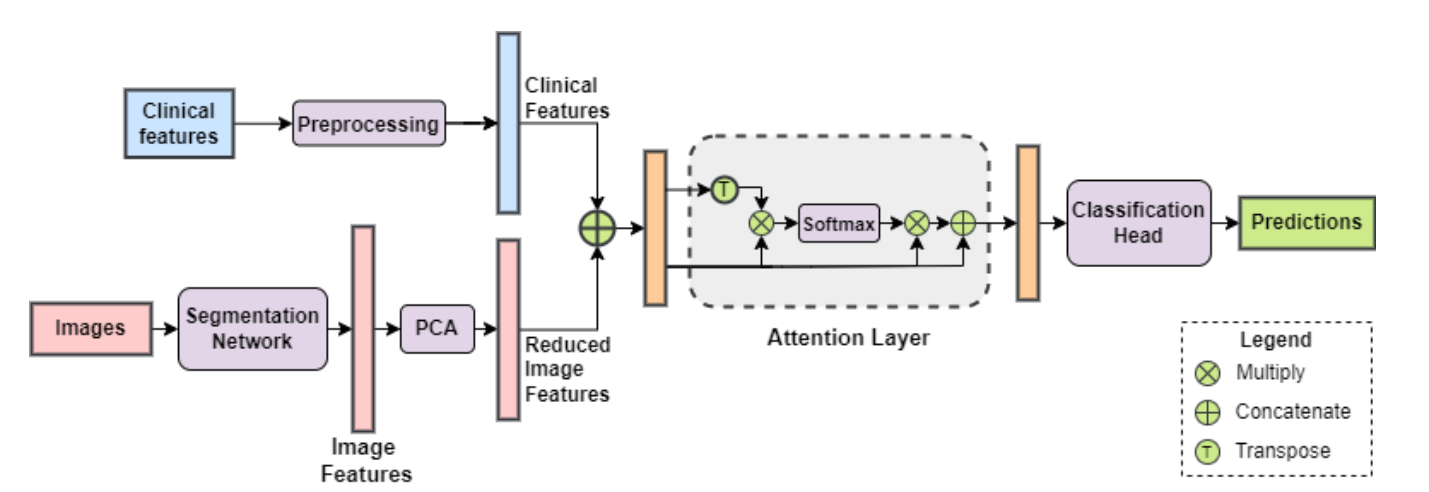

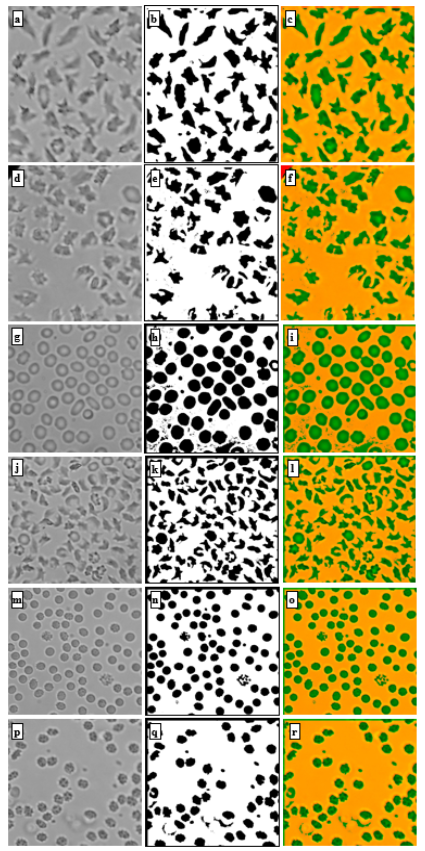

This paper introduces HAI-score, a robust AI-driven approach for quantifying HER2 H-scores from IHC-stained breast cancer samples, providing a reliable alternative to manual scoring and FDA-approved assays.